The nutritional claims we see reported in popular media often seem contradictory.

We learn that coffee is bad for you. Then 10 years later, we find that it’s actually good. In rapid succession, wine has alternated between being good and bad. Skipping breakfast is shown in one study to be a factor in obesity, and in another, it’s not.

For at least 50 years, these reversals have been happening within the scientific community as well, as the shifting U.S. government dietary guidelines show.

The United States Department of Agriculture’s 1990 guidelines advised avoiding cholesterol altogether. Today, there is no limit. The 1990 guidelines also recommended avoiding saturated fats (leading to the widespread adoption of highly processed trans fats). Today, the recommendation is to keep saturated fats to about 10% of a person’s daily caloric intake.

Many of the policies and behaviors that helped create the obesity epidemic came from a mindset of scarcity, that we needed to get as many calories into people as quickly as possible. Now that we have too much, that mindset has been slow to change.

Despite a 25% increase in added sugars in the U.S. since 1970 and the practice of adding high-fructose corn syrup to almost everything in the 1980s and 90s, the words “added sugar” weren’t included in the guidelines until 2000.

This inconsistency confuses the public, creates opportunities for spurious nutritional claims, and cheapens nutritional science in the eyes of many. Now that dieticians have a more complete understanding of what constitutes healthy food choices and the enormous role that diet plays in our well-being, it’s time to restore trust in the field. That begins by understanding how we got here in the first place.

To get some help exploring what is behind these shifts in nutritional science, we turned to Dr. Dustyn Williams, M.D., lead instructor and co-founder of the medical education platform OnlineMedEd.

How did we get here?

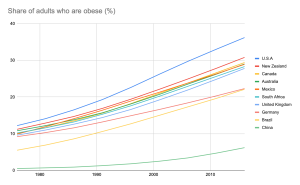

Obesity rates have skyrocketed worldwide and aren’t slowing down. In the U.S., the most extreme example of a global trend, adult obesity rates have grown from 11.9% in 1975 to 42.4% in 2020. In 1990, no U.S. state had a prevalence of obesity greater than or equal to 15%. By 2010, no state had an obesity rate under 20%, and it has only gotten worse since. Today, only Colorado and the District of Columbia have obesity rates under 25%.

Clearly, all this public uncertainty around nutrition has consequences. Reasons behind this confusion are numerous and complex, but can be distilled down to how the government and food industry responded to two interrelated factors: rapid population growth in the U.S. in the second half of the 20th century, and the unreliable conclusions that can result from how nutritional science is often tested.

Rapid postwar change in the U.S.: from famine to feast

From the Great Depression to the mid-1970s, the big nutritional concern in the U.S. was that Americans, particularly the most vulnerable, weren’t getting enough to eat.

The food industry responded to this need by developing highly processed and calorie-packed foods that could be shipped anywhere and sit on a pantry or store shelf for longer—foods like white breads, canned legumes and vegetables, and anything packaged with excessive amounts of sugar, sodium, or preservatives.

Coupled with the development of better shipping technology and refrigeration, people finally had access to calories. Too many calories of the wrong kind, it would turn out.

The U.S. government addressed malnutrition concerns through several programs. The most prominent was the Special Supplemental Nutrition Program for Women, Infants, and Children (WIC), which began in 1972.

Dr. Williams explained how developments and programs that sought to address nutritional challenges ended up making things worse.

“[The WIC]’s early thinking was based around a daily macronutrient percentage—this percentage should be carbs, this percentage should be fat, this percentage should be protein—based on data that couldn’t be reproduced,” Dr. Williams says.

This mindset of macronutrient percentages meant that prior to 1980, a box of sugary cereal counted just as much as an apple or carrot toward these figures and was seen as an acceptable food choice as far as WIC was concerned.

“Compounded by the fact that these underserved communities often have low access to good foods, the result was that kids were being fed ultra-processed foods and added sugars that ended up making them obese.”

“There’s been a huge shift from this macronutrient percentage understanding, like the food pyramid saying you need this much fat, this much carbs, etc.; basically, everything we did from 1970 to 2015, to dietary patterns,” Dr. Williams continues.

“This means it doesn’t matter how much of each macronutrient you get, what matters is the source you’re getting the nutrients from.”

In 2009, WIC made major revisions to address rising rates of obesity and diabetes, aligning with this new kind of nutritional thinking. The effects of these changes underscored the critical role nutrition plays in our health and well-being throughout our lives. We’ll write more about these changes and their effects in Part II.

Referencing a 19-year study of children born during the Dutch Hunger Winter of 1944–1945, when rations were cut to 500 kcal/day for six months for everyone, including pregnant mothers, Dr. Williams explained how too many calories can be even more dangerous than too few.

The study’s mothers were malnourished and had underweight babies. Yet when tested again at 19 as part of a compulsory medical evaluation to join the Dutch military, their male children showed no physical, mental, or other differences from children who weren’t born under famine.

“While we know that malnutrition can adversely affect fertility and that vitamin deficiencies can cause birth defects and miscarriages in the first trimester, this study shows that, for fetuses that make it to the second and third trimesters without birth defects, malnourishment matters a lot less than obesity during pregnancy,” Dr. Williams says.

“The placenta is really good at stealing nutrients from mom. So while the mother was famished, the fetus was trucking along.”

For the children of obese mothers, on the other hand, the story is quite different.

“There’s a two-year window that’s not really well understood, from the time the egg is fertilized to the time you turn two years old, where your number of adipocytes is determined,” Dr. Williams says.

“After that point, the number doesn’t change. This means that the only way to add fat is to add more triglycerides—a type of fat found in your blood—to the cells that are already there, making them bigger, which, in turn, makes the person bigger.”

With the number of adipocytes set, breaking this cycle of obesity from mother to child can be very difficult.

The way nutritional science is tested

Many of the more rigorous methods medical science uses to test the efficacy of a new drug or treatment, such as blind or double-blind trials that take place over long stretches of time, are incredibly difficult to apply to nutritional studies.

“When we start to talk about, ‘how long do you live on this diet?’ or whether you get [a] cancer or stroke, that is not so easy to study in humans,” Dr. David B. Allison, a biostatistician and psychologist with the University of Alabama-Birmingham, told the Washington Post in 2015.

“You need large numbers of people to eat what you tell them for a very long period of time. Typically, you want thousands of people over a period of years. You can immediately see that if you can get them to do it at all, could you even afford to do the study? Those kinds of studies are very rare.”

But nutritional science is a science nonetheless, and hypotheses need to be tested. To do this, we sacrifice the perfect for what is possible and hope that, over time, we’ll have enough data.

For nutrition, this means relying on studies and data that are easier to carry out, such as observational studies and food diaries. Asking people to report what they eat and then monitoring their health over time is a much more realistic test, but it is also more likely to yield misleading, incomplete, or even false conclusions.

Over time, as methods have improved and our understanding has grown, more studies have been performed with enough scientific rigor to be taken seriously.

The Dutch Hunger Winter study, with its seemingly counterintuitive conclusions, is one example. A review by Kidney Disease: Improving Global Outcomes (KDIGO) of the nutritional literature on patients with chronic kidney disease and bone mineral disorder is another. That review concluded that a diet with more plants, more organic material, less processing, and less animal protein improved outcomes.

So now what?

There have been plenty of signs in the last decade that we’re finally starting to come around to healthier nutritional patterns and mindsets. Dietary recommendations made by the U.S. government have changed considerably in recent years, and what we’re starting to see from these changes has been very promising.

In Part II, we’ll continue our conversation with Dr. Williams, take a look at the results of these changes, and try to lay down a few nutritional guidelines that patients, who are understandably unsure about what’s real and what’s noise, can easily follow.